Data Acquisition

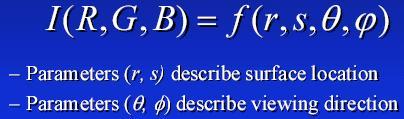

A surface map must first be defined before any quantitative analysis of the specific imaging features can be derived. A surface map model simply describes the radiance of every point on a measured surface as seen from every possible viewing direction.

Sampling "every" point of a surface would be an infinite process, so approximations over certain data ranges must be calculated to keep performance at acceptable levels during real-time rendering. This compression process yields the final data, which is stored as two-dimensional texture maps. These maps can be combined through multi-texture blending operations to render the final light-mapped image.
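The compression idea can be illustrated with a small sketch. It assumes the sampled radiance for one surface patch is arranged as a matrix `F[p][q]` (surface sample `p`, viewing direction `q`) and approximates it as a short sum of products of a per-surface map `g` and a per-view map `h`. The factorization used here (rank-one deflation by power iteration) is a stand-in chosen for brevity; the article does not say which factorization is used, and all function names are hypothetical.

```python
import math

def rank1_factor(F, iters=50):
    """Return (g, h) so that F[p][q] is approximated by g[p] * h[q]."""
    rows, cols = len(F), len(F[0])
    h = [1.0] * cols
    g = [0.0] * rows
    for _ in range(iters):
        # Power iteration: alternate between the surface and view factors.
        g = [sum(F[p][q] * h[q] for q in range(cols)) for p in range(rows)]
        norm = math.sqrt(sum(v * v for v in g)) or 1.0
        g = [v / norm for v in g]
        h = [sum(F[p][q] * g[p] for p in range(rows)) for q in range(cols)]
    return g, h

def factorize(F, terms):
    """Peel off `terms` rank-one (surface map, view map) pairs from F."""
    residual = [row[:] for row in F]
    maps = []
    for _ in range(terms):
        g, h = rank1_factor(residual)
        maps.append((g, h))
        for p in range(len(F)):
            for q in range(len(F[0])):
                residual[p][q] -= g[p] * h[q]
    return maps

def reconstruct(maps, p, q):
    """Blend the stored maps: a sum of products, one product per term."""
    return sum(g[p] * h[q] for g, h in maps)

def rms_error(F, maps):
    """Root mean square error between F and its approximation."""
    count = len(F) * len(F[0])
    total = sum((F[p][q] - reconstruct(maps, p, q)) ** 2
                for p in range(len(F)) for q in range(len(F[0])))
    return math.sqrt(total / count)
```

Each `(g, h)` pair plays the role of one pair of texture maps, and `reconstruct` mirrors what a multi-texture blend would compute per pixel. Adding more terms lowers `rms_error` at the cost of more texture storage and more blending passes.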

Gathering the information for surface light field data can be carried out through either of two processes.

Synthetic imaging is achieved through the usage of pre-generated data sets for the required input values.

Physical objects require a more complex acquisition routine. Geometry is first scanned with the aid of a structured-light scanning system of the kind used by professionals for real-world 3D modeling. Then 200 to 400 images must be taken from various fixed points to capture the surface texture features. The data is then combined by a complex registration routine that matches the geometric and photo data sets to build an input dataset.

Resampling and Normalization

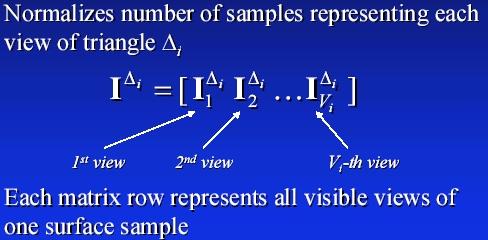

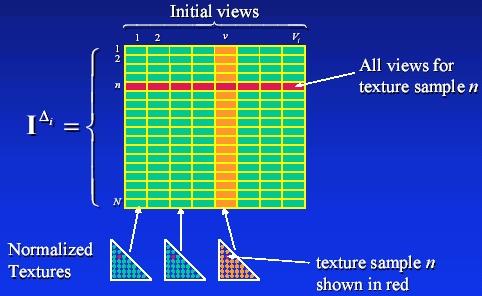

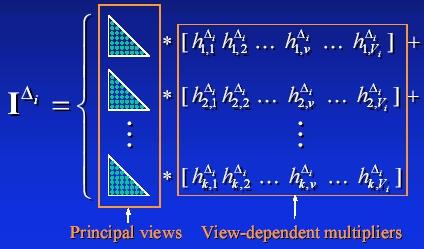

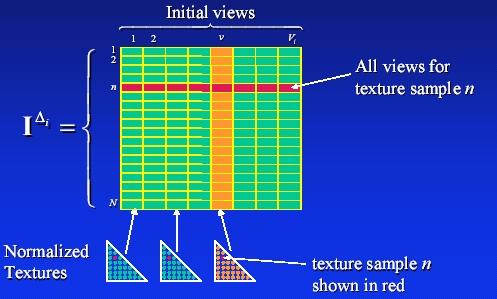

Next, the input dataset is processed through a visibility algorithm to determine the unoccluded views. The view set is then decomposed through resampling and normalization into corresponding equal-size triangle patterns, while retaining the characteristics needed to observe the rendered texture from multiple viewpoints. Although computationally intensive, the resampling process can generally be handled by standard texture-mapping hardware.

A texture normalization method is used to build initial viewing patterns for later synthesis of the associated rendered viewpoints.
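As a rough illustration of what resampling to equal-size triangle patterns can mean, the sketch below maps every source triangle onto the same canonical sample layout using barycentric coordinates. This is an assumed scheme, not the article's exact method; `lookup` is a hypothetical callback that returns the image value at a 2D image position.

```python
def canonical_samples(n):
    """Sample positions (u, v) in a right triangle with n samples per edge (n >= 2)."""
    step = 1.0 / (n - 1)
    return [(i * step, j * step) for j in range(n) for i in range(n - j)]

def resample_triangle(tri, n, lookup):
    """Resample the image region under `tri` into the canonical layout.

    tri    -- three (x, y) image positions of the triangle's vertices
    lookup -- hypothetical function (x, y) -> value that reads the source image
    """
    a, b, c = tri
    out = []
    for u, v in canonical_samples(n):
        w = 1.0 - u - v
        # Barycentric blend of the vertex positions gives the source position.
        x = w * a[0] + u * b[0] + v * c[0]
        y = w * a[1] + u * b[1] + v * c[1]
        out.append(lookup(x, y))
    return out
```

Whatever the size or shape of the source triangle, the result always holds `n * (n + 1) / 2` samples in the same order, so views of the same triangle taken from different positions can be compared sample by sample.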

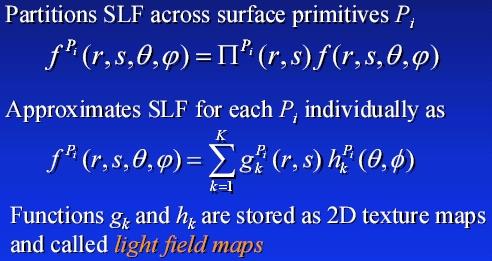

In other proposed light mapping routines, an approximation of the texture ranges is employed at this stage, since determining an infinite number of viewpoints is not possible given both hardware and real-world mathematical limitations. The process presented above is referred to as the Eigen-Texture Approach.

Viewpoint synthesis is carried out to determine the aspects of both original and novel views. Original views are easily determined, but novel views require extremely complex interpolation operations during texture blending, which limits the effectiveness of real-time rendering. The Eigen-Texture Approach also leads to image artifacts, since its formula assumes a constant viewing angle per triangle and has no capability for per-sample interpolation.

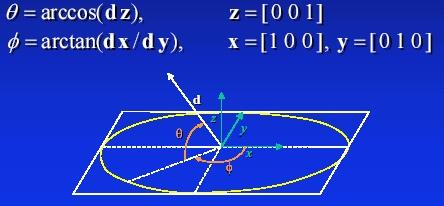

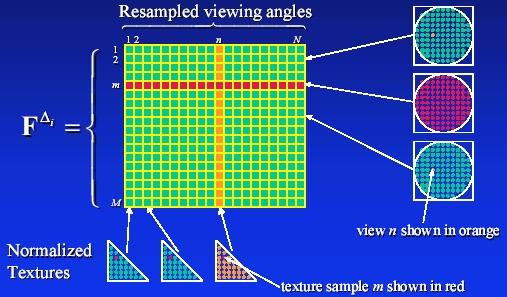

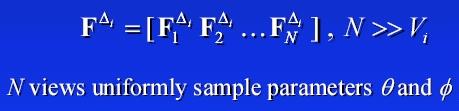

Intel has defined a better approach: resampling the viewing directions through direction parameterization. Viewing direction resampling simplifies the synthesis of novel views. This is accomplished by enabling per-sample interpolation during the triangle rendering process. In addition, a new approximation pattern can be employed that keeps image artifacts to a minimum, since root mean square errors can be minimized. Also, unlike the Eigen-Texture Approach, resampling can be performed through 3D hardware acceleration. The question now is what type of parameterization should be used.

Parameterization according to spherical coordinates is possible, though this technique would not prove suitable for hardware acceleration due to limitations with available technology.

Parameterization through orthographic projection onto the (x,y) plane is best suited for hardware acceleration, as the values can be expressed in terms of a two-dimensional space.
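A minimal sketch of this parameterization follows. It assumes the viewing direction is given in a local frame whose z axis points along the surface normal; the function names and the remapping to a [0, 1] texture range are illustrative assumptions, not taken from the article.

```python
import math

def direction_to_xy(direction):
    """Orthographically project a viewing direction onto the (x, y) plane.

    The direction is normalized first, so the result lies inside the unit disk.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("viewing direction must be non-zero")
    return x / length, y / length

def xy_to_texcoord(x, y):
    """Remap projected coordinates from [-1, 1] to the [0, 1] texture range."""
    return 0.5 * (x + 1.0), 0.5 * (y + 1.0)
```

A view straight down the normal, `(0, 0, 1)`, projects to the center of the disk, while grazing directions land on its rim. Because the result is a plain 2D coordinate, it can be used directly as a texture lookup, which is what makes this form convenient for graphics hardware.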

Now the viewing directions must be projected for visible triangle views. Then the triangulation of the original views must be established. Next, a dataset composed of a grid of regular viewpoints is derived through the blending of original views.
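The blending step can be sketched as follows, assuming each original view has already been projected to a 2D point and that the three views enclosing a grid point are blended with barycentric weights. The article does not spell out the weighting scheme, so this is one plausible choice with hypothetical function names.

```python
def barycentric_weights(p, a, b, c):
    """Weights of 2D point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb

def blend_views(p, views):
    """Blend three original views into the value for grid viewpoint p.

    views -- three ((x, y), radiance) pairs: the projected direction of each
             original view and the radiance it recorded for one surface sample
    """
    (a, ra), (b, rb), (c, rc) = views
    wa, wb, wc = barycentric_weights(p, a, b, c)
    return wa * ra + wb * rb + wc * rc
```

Evaluating `blend_views` at every point of a regular grid, each time using the triangle of original views that contains it, yields the regularly spaced set of viewpoints described above. A grid point that coincides with an original view reproduces that view's value exactly.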

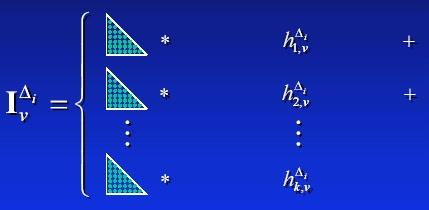

Referring back to the normalization formula presented earlier, the radiance data obtained after resampling can now be included.

The data available for the associated viewing directions can also be included, giving a combined formula that represents radiance across the range of resampled textures.